Tracking¶

Tracking is the process of associating a detection in one frame or image with a detection in another frame or image. A “track” is a series of detections that together represent multiple looks at the same underlying real-world object.

Tracking methodology considerations¶

Tracks are generated by joining detections into tracklets, which are associated groups of detections. Further iterations join tracklets into larger tracklets until there is confidence that all tracklets are well-formed tracks. The number of iterations is discussed under “Data Considerations”. For implementation symmetry, the first iteration casts each detection to a tracklet containing 1 detection.

Each iteration executes a graph-based algorithm that decides which tracklets to join based on the weight associated with each edge in the graph. More than 2 edges can be joined in 1 iteration of the graph edge contraction. There are many ways to calculate the weight of each edge. OpenEM supports edge weight methods using ML/AI or traditional computational methods.
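The iteration described above can be sketched as follows. This is a minimal illustration, not the OpenEM implementation: the function names, the `frame` key, and the thresholded connected-components merge are all assumptions chosen for brevity, and the real solver may select edges differently.

```python
from itertools import combinations


def detections_to_tracklets(detections):
    """First iteration: cast each detection to a tracklet containing 1 detection."""
    return [[det] for det in detections]


def merge_tracklets(tracklets, weight_fn, threshold):
    """One iteration: join every group of tracklets linked by edges >= threshold.

    Hypothetical simplification: edges are thresholded and connected
    components are merged using a small union-find structure.
    """
    parent = list(range(len(tracklets)))

    def find(i):
        # Follow parent pointers to the root, compressing the path on the way.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for a, b in combinations(range(len(tracklets)), 2):
        if weight_fn(tracklets[a], tracklets[b]) >= threshold:
            root_a, root_b = find(a), find(b)
            if root_a != root_b:
                parent[root_b] = root_a  # contract the edge

    groups = {}
    for idx, tracklet in enumerate(tracklets):
        groups.setdefault(find(idx), []).extend(tracklet)
    # Keep each merged tracklet ordered by frame number.
    return [sorted(group, key=lambda det: det["frame"]) for group in groups.values()]


def closeness(tracklet_a, tracklet_b):
    """Toy edge weight: 1 minus the horizontal gap between two tracklets."""
    return 1.0 - abs(tracklet_a[-1]["x"] - tracklet_b[0]["x"])


detections = [
    {"frame": 0, "x": 0.10},
    {"frame": 1, "x": 0.12},
    {"frame": 0, "x": 0.80},
    {"frame": 1, "x": 0.79},
]
tracks = merge_tracklets(detections_to_tracklets(detections), closeness, 0.9)
```

Swapping `closeness` for a different `weight_fn` changes the association behavior without touching the merge loop, which mirrors how the edge weight methods below plug into the same graph solver. Note that this simplified merge does not prevent two detections from the same frame ending up in one tracklet.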

An example tracker using Tator as a data store for both detections and tracks is located in scripts/tator_tracker.py.

Recursive graph solving overview¶

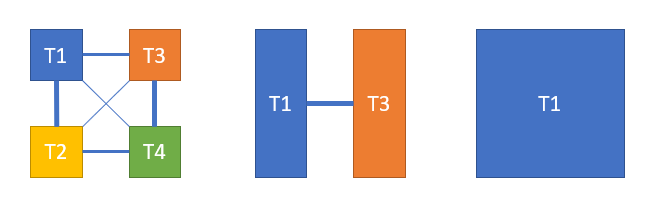

The figure above shows four tracks being contracted into one via iterative graph edge contraction. The weights of the graph are shown qualitatively as the width of the line representing each edge.

Simple IoU¶

Graphical depiction of intersection over union (IoU). Adrian Rosebrock / CC BY-SA (https://creativecommons.org/licenses/by-sa/4.0)

A tracker starts with a list of detections. In some video, specifically higher frame-rate, low-occlusion video, the IoU of one detection to another can be highly indicative that the object is the same across frames. The IoU weighting mechanism can start to produce errors on long overlapping tracks. As an example, picture looking across a two-way street. Assuming a well-trained object detector, it is probable that almost all frames capture two oncoming cars passing each other. However, an IoU tracker that looks only at the overlap of detections can misjudge the truth, resulting in ‘track swappage’. In this case, rather than reporting 2 cars, one traveling left and one traveling right, the tracker may report 2 cars that each drive towards the middle and turn around.
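For reference, the IoU of two detections can be computed as in the sketch below. It assumes each detection is a dictionary with `x`, `y`, `width`, and `height` keys in relative coordinates, with `x`, `y` as the top-left corner; the function name is illustrative and is not taken from OpenEM.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Assumes 'x', 'y' give the top-left corner and all values are relative
    coordinates (0.0 to 1.0).
    """
    ax1, ay1 = box_a["x"], box_a["y"]
    ax2, ay2 = ax1 + box_a["width"], ay1 + box_a["height"]
    bx1, by1 = box_b["x"], box_b["y"]
    bx2, by2 = bx1 + box_b["width"], by1 + box_b["height"]

    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    union = box_a["width"] * box_a["height"] + box_b["width"] * box_b["height"] - intersection
    return intersection / union if union > 0 else 0.0


previous = {"x": 0.0, "y": 0.0, "width": 0.2, "height": 0.2}
current = {"x": 0.1, "y": 0.0, "width": 0.2, "height": 0.2}
weight = iou(previous, current)  # half of each box overlaps the other
```

Because the score depends only on overlap, two cars whose boxes cross in the middle of the frame score similarly against either predecessor, which is the source of the track swapping described above.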

Directional¶

Directional tracking generates edge weights based on the similarity of velocity between two tracks. In the car example above, the IoU strategy may be limited to creating tracks of at most N frames. Given N frames, each IoU-based track has a calculable velocity, and edge weights are valued based on the similarity of velocity between two tracks. If objects have defined motion patterns, the directional model can add fidelity to an IoU tracker by biasing association based on the physical characteristics of the object being tracked.
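One way to turn this idea into an edge weight is sketched below. It is an assumption-laden illustration rather than the OpenEM weighting: velocity is estimated from the first and last detection centers of each tracklet, and the weight is the cosine of the angle between the two velocity vectors, clipped at zero, so it compares direction only and ignores speed. The `frame` key and function names are hypothetical.

```python
import math


def center(det):
    """Center point of a detection given top-left x, y and its width, height."""
    return det["x"] + det["width"] / 2.0, det["y"] + det["height"] / 2.0


def velocity(tracklet):
    """Average displacement per frame between the first and last detection."""
    first, last = tracklet[0], tracklet[-1]
    frames = last["frame"] - first["frame"]
    if frames <= 0:
        return 0.0, 0.0  # a single detection has no measurable velocity
    (x0, y0), (x1, y1) = center(first), center(last)
    return (x1 - x0) / frames, (y1 - y0) / frames


def direction_similarity(tracklet_a, tracklet_b):
    """Edge weight in [0, 1]: near 1 for the same heading, 0 for opposing or unknown."""
    va, vb = velocity(tracklet_a), velocity(tracklet_b)
    norm_a, norm_b = math.hypot(*va), math.hypot(*vb)
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    cosine = (va[0] * vb[0] + va[1] * vb[1]) / (norm_a * norm_b)
    return max(0.0, cosine)


def make_tracklet(x_start, x_end):
    """Helper for the example: a 2-detection tracklet moving horizontally."""
    box = {"y": 0.4, "width": 0.1, "height": 0.1}
    return [dict(box, frame=0, x=x_start), dict(box, frame=10, x=x_end)]


rightward_early = make_tracklet(0.1, 0.3)
rightward_late = make_tracklet(0.5, 0.7)
leftward = make_tracklet(0.7, 0.5)
```

In the two-way street example, `rightward_early` and `rightward_late` would receive a high weight, while either paired with `leftward` would receive zero, discouraging the swap an IoU-only tracker could make.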

Directional tracking can be difficult for objects that move erratically or become occluded for long periods of time. Directional tracking also does not help identify tracks that leave and later return to the field of view within a recording.

Siamese CNN¶

This method compares two tracklets that each have no more than 1 detection. The appearance features of each detection or series of detections are extracted and compared. The similarity of the detections is used as the edge weight in the graph. This method of edge weight determination can help recognize whether detections are the same object even if no motion or overlap is present between multiple looks at the object. Using the car example above, the appearance features of a red car would match it to a similar look at the same red car. This method can run into issues for objects that change or transform their appearance. Using the car example above, a weakness of this approach would be exposed if one car were a convertible in the process of folding in its roof.

This method requires a trained model.
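The comparison step can be illustrated with plain feature vectors. In the sketch below, the vectors stand in for the output of a trained appearance model; the vectors themselves, the function name, and the choice of cosine similarity are assumptions for illustration, and the actual network may use a different learned distance.

```python
import math


def cosine_similarity(features_a, features_b):
    """Similarity of two appearance feature vectors, usable as an edge weight."""
    if len(features_a) != len(features_b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(a * b for a, b in zip(features_a, features_b))
    norm_a = math.sqrt(sum(a * a for a in features_a))
    norm_b = math.sqrt(sum(b * b for b in features_b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical embeddings: two looks at a red car and one look at a blue car.
red_car_look_1 = [0.9, 0.1, 0.2]
red_car_look_2 = [0.8, 0.2, 0.2]
blue_car = [0.1, 0.2, 0.9]

same_object_weight = cosine_similarity(red_car_look_1, red_car_look_2)
different_object_weight = cosine_similarity(red_car_look_1, blue_car)
```

Neither position nor motion enters the calculation, which is why this kind of weight can re-associate an object after a long gap.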

Multiple stage approach¶

Each one of these stages can be used in conjunction with another. The reference tracker shows the concept of using different methods based on the current iteration of the network. This fusion approach can be useful to

Training considerations¶

Of the currently supported reference methods, the Siamese CNN is the only method requiring training. <TODO: insert link to how to train siamese data>.

Bootstrapping the tracker can be useful for generating training data. The IoU or Directional tracker methods can generate tracks to be reviewed by annotators. After this review, the data can be used to train the Siamese CNN. This can produce training data for associating detections faster than having annotators start by manually associating detections.

Tracker strategy¶

The --strategy-config argument to tator_tracker.py expects a YAML file that configures the tracker. The options above are exposed via the YAML syntax. An example with commentary is provided below:

```yaml
method: iou # One of 'hybrid', 'iou', or 'iou-motion'
args: # keyword arguments to the underlying strategy initializer (tracking/weights.py)
  threshold: 0.20 # Sets the IoU threshold for linkage
extension: # Optional
  method: linear-motion # linear-motion (TODO: add additional methods, like KCF, etc.)
frame-diffs: [1,16,32,128] # List of what frames to run the graph solver on
class-method: # optional external module used to classify tracks
  pip: git+https://github.com/cvisionai/tracker_classification # Name or pip package or URL
  function: tracker_rules.angle.classify_track # Function to use to classify tracks
  args: # keyword arguments specific to classify method
    minimum_length: 50
    label: Direction
    names:
      Entering: [285, 360]
      Entering: [0,75]
      Exiting: [105,265]
      Unknown: [0,360]
```

Classification plugins¶

An example classification plugin project can be found on GitHub. The example aligns with the sample strategy above. media_id is a dictionary representing a media element, and proposed_track_element is a list of detection objects.

The full definition of the dictionary and detection object is implementation specific; for Tator-backed deployments, the definitions of Media and detections apply. At a minimum, the media dictionary must supply the width and height of the media. Each detection must have, at a minimum, x, y, height, and width, each in relative coordinates (0.0 to 1.0). Additional properties may be present in a detection's attributes object.

```python
def classify_track(media_id,
                   proposed_track_element,
                   minimum_length=2,
                   label='Label',
                   names={}):
```
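To make the plugin contract more concrete, the following is a hypothetical angle-based classifier that uses the signature above. Everything in the body is an assumption: the return value (a keep flag plus an attribute dictionary), the angle convention (degrees from `atan2` in image coordinates, wrapped to 0 to 360), and the first-match rule over `names` are illustrative and are not taken from the tracker_classification project.

```python
import math


def classify_track(media_id, proposed_track_element,
                   minimum_length=2, label='Label', names=None):
    """Hypothetical plugin: label a track by its overall heading in degrees.

    Returns (keep, attributes). This return shape is an assumption made for
    the sketch; check the plugin project for the real contract.
    """
    names = names or {}
    if len(proposed_track_element) < minimum_length:
        return False, {}  # too short to classify, so reject the track

    first, last = proposed_track_element[0], proposed_track_element[-1]
    # Convert relative displacement to pixels so the angle is not distorted
    # by a non-square frame.
    dx = (last['x'] - first['x']) * media_id['width']
    dy = (last['y'] - first['y']) * media_id['height']
    angle = math.degrees(math.atan2(dy, dx)) % 360

    # The first range containing the angle wins (dictionaries keep insertion order).
    for name, (low, high) in names.items():
        if low <= angle <= high:
            return True, {label: name}
    return True, {}


media = {'width': 1920, 'height': 1080}
track = [
    {'x': 0.8, 'y': 0.5, 'width': 0.1, 'height': 0.1},
    {'x': 0.2, 'y': 0.5, 'width': 0.1, 'height': 0.1},
]
result = classify_track(media, track, label='Direction',
                        names={'Exiting': [105, 265], 'Unknown': [0, 360]})
```

Here the track moves straight left, which is 180 degrees under the assumed convention, so it falls in the `Exiting` range. A catch-all range such as `Unknown: [0, 360]` only has an effect if it is listed after the more specific ranges.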

Example Tator Workflow¶

A complete reference Tator workflow is supplied below.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: tracker-example
spec:
  entrypoint: pipeline
  ttlStrategy:
    SecondsAfterSuccess: 600
    SecondsAfterFailure: 86400
  volumeClaimTemplates:
  - metadata:
      name: workdir
    spec:
      storageClassName: aws-efs
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 50Mi
  volumes:
  - name: dockersock
    hostPath:
      path: /var/run/docker.sock
  templates:
  - name: pipeline
    steps:
    - - name: worker
        template: worker
  - name: worker
    inputs:
      artifacts:
      - name: strategy
        path: /work/strategy.yaml
        raw:
          data: |
            method: iou-motion
            extension:
              method: linear-motion
            frame-diffs: [1,16,32,128]
            class-method:
              pip: git+https://github.com/cvisionai/tracker_classification
              function: tracker_rules.angle.classify_track
              args:
                minimum_length: 50
                label: Direction
                names:
                  Entering: [285, 360]
                  Entering: [0,75]
                  Exiting: [105,265]
                  Unknown: [0,360]
    container:
      image: cvisionai/openem_lite:experimental
      imagePullPolicy: Always
      resources:
        requests:
          cpu: 4000m
      env:
      - name: TATOR_MEDIA_IDS
        value: "{{workflow.parameters.media_ids}}"
      - name: TATOR_API_SERVICE
        value: "{{workflow.parameters.rest_url}}"
      - name: TATOR_AUTH_TOKEN
        value: "{{workflow.parameters.rest_token}}"
      - name: TATOR_PROJECT_ID
        value: "{{workflow.parameters.project_id}}"
      - name: TATOR_PIPELINE_ARGS
        value: "{\"detection_type_id\": 65, \"tracklet_type_id\": 30, \"version_id\": 53, \"mode\": \"nonvisual\"}"
      volumeMounts:
      - name: workdir
        mountPath: /work
      command: [python3]
      args: ["/scripts/tator/tracker_entry.py"]
```
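The container receives its configuration through the environment variables set in the workflow. The sketch below shows one way an entry script might read them. It is not the contents of tracker_entry.py: the function name is hypothetical, and treating TATOR_MEDIA_IDS as a comma-separated list of integers is an assumption.

```python
import json
import os


def read_pipeline_config(environ=None):
    """Collect the workflow's environment variables into one dictionary."""
    environ = os.environ if environ is None else environ
    return {
        # Assumption: media IDs arrive as a comma-separated string.
        "media_ids": [int(m) for m in environ["TATOR_MEDIA_IDS"].split(",") if m.strip()],
        "api_service": environ["TATOR_API_SERVICE"],
        "token": environ["TATOR_AUTH_TOKEN"],
        "project_id": int(environ["TATOR_PROJECT_ID"]),
        # TATOR_PIPELINE_ARGS is a JSON object, as in the workflow above.
        "pipeline_args": json.loads(environ["TATOR_PIPELINE_ARGS"]),
    }


example_env = {
    "TATOR_MEDIA_IDS": "101,102",
    "TATOR_API_SERVICE": "https://example.invalid/rest",
    "TATOR_AUTH_TOKEN": "not-a-real-token",
    "TATOR_PROJECT_ID": "7",
    "TATOR_PIPELINE_ARGS": '{"detection_type_id": 65, "tracklet_type_id": 30, '
                           '"version_id": 53, "mode": "nonvisual"}',
}
config = read_pipeline_config(example_env)
```

Passing a plain dictionary instead of `os.environ` keeps the parsing logic testable outside a cluster.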